How To Create Ground Truth Data With Rcnn

INSTANCE SEGMENTATION | DEEP LEARNING

Mask RCNN implementation on a custom dataset!

All incorporated in a single Python notebook!

What is Instance Segmentation?

Instance segmentation is the task of pixel-level recognition of object outlines. It is one of the hardest vision tasks compared with related computer vision tasks. Consider the following terms:

- Classification: There is a horse/man in this image.

- Semantic Segmentation: These are all the horse/man pixels.

- Object Detection: There is a horse and a man in this image at these locations. We're starting to account for objects that overlap.

- Instance Segmentation: There is a horse and a man at these locations, and these are the pixels that belong to each one.
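To make these terms concrete, here is a small NumPy sketch on toy data (not from the article's dataset). It stores two objects the way Mask RCNN represents instances, as a stack of binary masks shaped (H, W, N) plus one class ID per mask, and derives the semantic label map and the bounding boxes from it. The helper mask_to_box is hypothetical.

```python
import numpy as np

# Toy 4x6 image with two objects: a "horse" (left) and a "man" (right).
H, W = 4, 6

# Instance segmentation: one binary mask per object stacked along the last
# axis -> shape (H, W, N), plus one class ID per object.
instance_masks = np.zeros((H, W, 2), dtype=bool)
instance_masks[1:3, 0:2, 0] = True   # object 0 (4 pixels)
instance_masks[1:4, 3:5, 1] = True   # object 1 (6 pixels)
class_ids = np.array([1, 2])         # 1 = Horse, 2 = Man (0 is background)

# Semantic segmentation: one label map that only says which class each pixel
# belongs to; two touching horses would merge into a single region.
semantic = np.zeros((H, W), dtype=np.int32)
for i, cid in enumerate(class_ids):
    semantic[instance_masks[..., i]] = cid

# Object detection: just a box (y1, x1, y2, x2) per object, end-exclusive.
def mask_to_box(mask):
    ys, xs = np.where(mask)
    return int(ys.min()), int(xs.min()), int(ys.max()) + 1, int(xs.max()) + 1

boxes = [mask_to_box(instance_masks[..., i]) for i in range(instance_masks.shape[-1])]
print(semantic)
print(boxes)
```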

You can learn the basics and how Mask RCNN actually works from here.

We will implement Mask RCNN for a custom dataset in just one notebook. All you need to do is run all the cells in the notebook. We will perform a simple Horse vs Man classification in this notebook. You can change this to your own dataset.

I have shared the links at the end of the article.

Let's begin.

1. Importing and cloning repositories.

First, we will clone a repository that contains some of the building blocks for implementing Mask RCNN. The function copytree() will get the necessary files for you. After cloning the repository, we will import the dataset. I am importing the dataset from my drive.

!git clone "https://github.com/SriRamGovardhanam/wastedata-Mask_RCNN-multiple-classes.git"

import shutil, os

def copytree(src='/content/wastedata-Mask_RCNN-multiple-classes/main/Mask_RCNN', dst='/content/', symlinks=False, ignore=None):
    # Remove stale notebook checkpoints if they exist
    try:
        shutil.rmtree('/content/.ipynb_checkpoints')
    except FileNotFoundError:
        pass
    # Copy every file and folder of src into dst
    for item in os.listdir(src):
        s = os.path.join(src, item)
        d = os.path.join(dst, item)
        if os.path.isdir(s):
            shutil.copytree(s, d, symlinks, ignore)
        else:
            shutil.copy2(s, d)

copytree()

# Copy the annotated dataset from Google Drive
shutil.copytree('/content/drive/MyDrive/MaskRCNN/newdataset', '/content/dataset')
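One practical snag: the copytree() helper calls shutil.copytree for each subfolder, which raises an error if the target folder already exists, so rerunning the cell fails. On Python 3.8 or newer, shutil.copytree accepts dirs_exist_ok=True, which merges into an existing destination. A minimal sketch, using temporary folders in place of the Colab paths; merge_copy is a hypothetical name.

```python
import os
import shutil
import tempfile

def merge_copy(src, dst):
    """Copy every entry of src into dst, merging into folders that already exist.

    Same effect as the copytree() helper above (minus the checkpoint cleanup),
    but safe to rerun thanks to dirs_exist_ok=True (Python 3.8+).
    """
    shutil.copytree(src, dst, dirs_exist_ok=True)

# Self-contained demo with temporary folders instead of /content paths.
with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "Mask_RCNN")
    dst = os.path.join(tmp, "content")
    os.makedirs(os.path.join(src, "mrcnn"))
    with open(os.path.join(src, "mrcnn", "model.py"), "w") as f:
        f.write("# placeholder\n")
    os.makedirs(dst)          # dst already exists, like /content/
    merge_copy(src, dst)
    merge_copy(src, dst)      # a second run does not raise
    copied = sorted(os.listdir(dst))

print(copied)
```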

Let's talk about the dataset now. The dataset that I imported from my drive is structured as:

dataset
--train
----21414.JPG
----2r41rfw.JPG
----...
----via_project.json
--val
----w2141fq2cr2qrc234.JPG
----2awfr4awawfa1rfw.jpg
----...
----via_project.json

The dataset that I have used was created with the VGG Image Annotator (VIA). The annotation file is in .json format and contains the coordinates of all the polygons I have drawn on my images. The .json file will look something like this:

{"00b1f292-23dd-44d4-aad3-c1ffb6a6ad5a___RS_LB 4479.JPG21419": {
  "filename": "00b1f292-23dd-44d4-aad3-c1ffb6a6ad5a___RS_LB 4479.JPG",
  "size": 21419,
  "regions": [
    {"shape_attributes": {"name": "circle", "cx": 83, "cy": 177, "r": 43},
     "region_attributes": {"name": "Horse", "image_quality": {"good": true, "frontal": true, "good_illumination": true}}},
    {"shape_attributes": {"name": "polygon", "all_points_x": [1, 2, 4, 5], "all_points_y": [0.2, 2, 5, 7]},
     "region_attributes": {"name": "Man", "image_quality": {"good": true, "frontal": true, "good_illumination": true}}},
    {"shape_attributes": {"name": "ellipse", "cx": 156, "cy": 189, "rx": 19.3, "ry": 10, "theta": -0.289},
     "region_attributes": {"name": "Horse", "image_quality": {"good": true, "frontal": true, "good_illumination": true}}}
  ],
  "file_attributes": {"caption": "", "public_domain": "no", "image_url": ""}
}, ..., ...}

Note: Although I have shown more than one shape in the above snippet, you should use only one shape while annotating the images. For instance, if you choose polygons, as I have for the Horse vs Man classifier, then you should annotate all the regions with polygons only. We will discuss how you can use multiple shapes for annotations later in this tutorial.
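Since mixing shapes makes mask loading harder, it can be worth scanning the export before training. The sketch below assumes the VIA layout shown above; it also accepts 'regions' stored as a dict, which is how older VIA 1.x exports store them (matterport's balloon sample handles both cases). shape_counts is a hypothetical helper, not part of Mask RCNN.

```python
import json
from collections import Counter

def shape_counts(via_json):
    """Count annotation shapes per image in a VIA export (dict keyed by image)."""
    counts = {}
    for entry in via_json.values():
        regions = entry["regions"]
        if isinstance(regions, dict):        # older VIA 1.x exports
            regions = list(regions.values())
        counts[entry["filename"]] = Counter(r["shape_attributes"]["name"] for r in regions)
    return counts

sample = json.loads("""{
  "a.jpg123": {"filename": "a.jpg", "size": 123, "regions": [
     {"shape_attributes": {"name": "polygon", "all_points_x": [1, 5, 5], "all_points_y": [1, 1, 4]},
      "region_attributes": {"name": "Horse"}},
     {"shape_attributes": {"name": "circle", "cx": 3, "cy": 3, "r": 2},
      "region_attributes": {"name": "Man"}}],
   "file_attributes": {}}
}""")

counts = shape_counts(sample)
# Images that use anything other than polygons need attention.
mixed = [f for f, c in counts.items() if set(c) != {"polygon"}]
print(counts)
print("Images with non-polygon shapes:", mixed)
```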

2. Selection of the right versions of libraries

Note: This is the most important step for implementing the Mask RCNN architecture without getting any errors.

!pip install keras==2.2.5
%tensorflow_version 1.x

You will face many errors with TensorFlow version 2.x. Also, Keras version 2.2.5 will save us from many errors. I will not go into detail regarding which kinds of errors this resolves.

3. Configuration according to our dataset

First, we will import a few libraries. Then we will give the path to the trained weights file. This could be the COCO weights file or your last saved weights file (checkpoint). The log directory is where all our data will be stored when training begins. The model weights at every epoch are saved in .h5 format in that directory, so if training is interrupted for any reason, you can always start from where you left off by specifying the path to the last saved model weights. For instance, if I am training my model for 10 epochs and training is interrupted at epoch 3, I will have 3 .h5 files stored in my logs directory. I do not have to start training from the beginning: I can just change my weights path to the last weights file, e.g. 'mask_rcnn_object_0003.h5'.
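Picking the last checkpoint by hand is easy to get wrong once several runs have accumulated in logs/. The sketch below assumes the layout visible later in this tutorial (logs/object20201209T0658/mask_rcnn_object_0010.h5): one timestamped folder per run, one file per epoch. latest_checkpoint is a hypothetical helper; matterport's MaskRCNN class also provides a find_last() method for a similar purpose.

```python
import os
import re
import tempfile

def latest_checkpoint(logs_dir, name="object"):
    """Return the newest 'mask_rcnn_<name>_NNNN.h5' under logs_dir, or None.

    Assumes logs/<name><YYYYMMDDTHHMM>/mask_rcnn_<name>_<epoch>.h5, so run folders
    sort chronologically by name; within a run, the highest epoch wins.
    """
    pattern = re.compile(r"mask_rcnn_" + re.escape(name) + r"_(\d{4})\.h5$")
    best = None
    for run in os.listdir(logs_dir):
        run_dir = os.path.join(logs_dir, run)
        if not (run.startswith(name) and os.path.isdir(run_dir)):
            continue
        for fname in os.listdir(run_dir):
            m = pattern.match(fname)
            if m:
                key = (run, int(m.group(1)))
                if best is None or key > best[0]:
                    best = (key, os.path.join(run_dir, fname))
    return None if best is None else best[1]

# Demo: an older run with epoch 9 and a newer run interrupted after epoch 3.
with tempfile.TemporaryDirectory() as logs:
    for run, epochs in [("object20201208T1000", [9]), ("object20201209T0658", [1, 2, 3])]:
        os.makedirs(os.path.join(logs, run))
        for e in epochs:
            open(os.path.join(logs, run, "mask_rcnn_object_%04d.h5" % e), "w").close()
    rel = os.path.relpath(latest_checkpoint(logs), logs)

print(rel)
```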

import os
import sys
import json
import datetime
import numpy as np
import skimage.draw
import cv2
from mrcnn.visualize import display_instances
import matplotlib.pyplot as plt

# Root directory of the project
ROOT_DIR = os.path.abspath("/content/wastedata-Mask_RCNN-multiple-classes/main/Mask_RCNN/")

# Import Mask RCNN
sys.path.append(ROOT_DIR)  # To find local version of the library
from mrcnn.config import Config
from mrcnn import model as modellib, utils

# Path to trained weights file
COCO_WEIGHTS_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")

# Directory to save logs and model checkpoints
DEFAULT_LOGS_DIR = os.path.join(ROOT_DIR, "logs")

class CustomConfig(Config):
    """Configuration for training on the dataset.
    Derives from the base Config class and overrides some values.
    """
    # Give the configuration a recognizable name
    NAME = "object"

    # We use a GPU with 12GB memory, which can fit two images.
    # Adjust down if you use a smaller GPU.
    IMAGES_PER_GPU = 2

    # Number of classes (including background)
    NUM_CLASSES = 1 + 2  # Background + (Horse and Man)

    # Number of training steps per epoch
    STEPS_PER_EPOCH = 100

    # Skip detections with < 90% confidence
    DETECTION_MIN_CONFIDENCE = 0.9

The CustomConfig class contains our custom configuration for the model. We simply override values of the original Config class from the config.py file that was imported earlier. The number of classes should be total_classes + 1 (for background). Steps per epoch are set to 100, but you can increase this if you have access to more computing resources.
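The arithmetic behind these settings is small but easy to get wrong. In matterport's Config, the batch size is derived as IMAGES_PER_GPU * GPU_COUNT, and each training step consumes one batch. The sketch below uses a hypothetical stand-in class instead of importing mrcnn, so only the fields discussed here exist, and its default values are placeholders; CLASS_NAMES is an illustrative attribute.

```python
class BaseConfig:
    """Hypothetical stand-in for mrcnn.config.Config (placeholder defaults)."""
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1
    NUM_CLASSES = 1
    STEPS_PER_EPOCH = 1000

    def __init__(self):
        # Batch size derived from the per-GPU setting, as the real Config does.
        self.BATCH_SIZE = self.IMAGES_PER_GPU * self.GPU_COUNT

class CustomConfig(BaseConfig):
    CLASS_NAMES = ["Horse", "Man"]            # hypothetical helper attribute
    NUM_CLASSES = 1 + len(CLASS_NAMES)        # background + Horse + Man
    IMAGES_PER_GPU = 2
    STEPS_PER_EPOCH = 100

cfg = CustomConfig()
images_per_epoch = cfg.STEPS_PER_EPOCH * cfg.BATCH_SIZE
print(cfg.NUM_CLASSES, cfg.BATCH_SIZE, images_per_epoch)
```

With these values, each epoch sees 200 training images (with repetition if the training set is smaller), which is worth keeping in mind when raising STEPS_PER_EPOCH.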

The detection threshold is 90%, which means all proposals with less than 0.9 confidence will be ignored. This threshold is different from the one used when testing an image. Look at the two images below for clarification.
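The effect of DETECTION_MIN_CONFIDENCE can be illustrated with made-up scores: detections scoring below the threshold are simply dropped. The sketch compares the training value (0.9) with the 0.7 used in the InferenceConfig of step 8. It is a simplified illustration with a hypothetical keep_confident helper, not the library's detection layer.

```python
import numpy as np

def keep_confident(scores, class_ids, min_confidence):
    """Return indices and class IDs of detections scoring at least min_confidence."""
    scores = np.asarray(scores)
    keep = np.where(scores >= min_confidence)[0]
    return keep, np.asarray(class_ids)[keep]

scores = [0.95, 0.88, 0.72, 0.40]   # made-up detection scores
class_ids = [1, 2, 1, 2]            # 1 = Horse, 2 = Man

train_keep, _ = keep_confident(scores, class_ids, 0.9)        # training config
test_keep, test_ids = keep_confident(scores, class_ids, 0.7)  # inference config
print(train_keep, test_keep, test_ids)
```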

4. Setting up the CustomDataset class

The class below contains 3 crucial methods for our custom dataset. This class inherits from "utils.Dataset", which we imported in step 3. The 'load_custom' method registers each image together with its annotations. Here we extract the polygons and the respective classes.

polygons = [r['shape_attributes'] for r in a['regions']]
objects = [s['region_attributes']['name'] for s in a['regions']]

The polygons variable contains the coordinates of the masks. The objects variable contains the names of the respective classes.
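To train on more than one class, the names in objects have to be turned into integer IDs, with 0 reserved for the background. A minimal sketch, assuming the classes are registered in the order Horse, Man (for example with the Dataset class's add_class method); NAME_TO_ID and regions_to_polygons_and_ids are hypothetical names.

```python
CLASS_NAMES = ["Horse", "Man"]                                    # order defines the IDs
NAME_TO_ID = {name: i + 1 for i, name in enumerate(CLASS_NAMES)}  # 0 = background

def regions_to_polygons_and_ids(a):
    """Turn one VIA image entry into (polygons, class_ids)."""
    regions = a["regions"]
    if isinstance(regions, dict):          # older VIA 1.x exports
        regions = list(regions.values())
    polygons = [r["shape_attributes"] for r in regions]
    objects = [r["region_attributes"]["name"] for r in regions]
    class_ids = [NAME_TO_ID[name] for name in objects]  # KeyError on unknown labels
    return polygons, class_ids

entry = {"filename": "a.jpg", "regions": [
    {"shape_attributes": {"name": "polygon", "all_points_x": [1, 4, 4], "all_points_y": [1, 1, 3]},
     "region_attributes": {"name": "Man"}},
    {"shape_attributes": {"name": "polygon", "all_points_x": [0, 2, 2], "all_points_y": [0, 0, 2]},
     "region_attributes": {"name": "Horse"}},
]}
polygons, class_ids = regions_to_polygons_and_ids(entry)
print(class_ids)
```

Failing loudly on an unknown label is deliberate: a typo such as "horse" instead of "Horse" in VIA would otherwise silently corrupt the training data.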

The 'load_mask' method loads the masks according to the coordinates of the polygons. The mask of an image is nothing but an array of binary values, with one channel per object. skimage.draw.polygon() does the job for us and returns the row and column indices of the pixels inside the polygon.
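The sketch below mirrors what load_mask produces, a boolean (H, W, N) array plus an array of class IDs, without depending on scikit-image. As a swapped-in technique, it rasterizes each polygon with the even-odd (ray casting) rule at pixel centres, so pixels along the boundary may differ slightly from skimage.draw.polygon. polygon_mask and load_mask_sketch are hypothetical names.

```python
import numpy as np

def polygon_mask(all_points_y, all_points_x, height, width):
    """Rasterize one polygon into a boolean (height, width) mask.

    Pixel (r, c) is treated as the unit square centred at (r + 0.5, c + 0.5)
    and is kept if that centre lies inside the polygon (even-odd rule).
    """
    ys = np.asarray(all_points_y, dtype=float)
    xs = np.asarray(all_points_x, dtype=float)
    rr, cc = np.mgrid[0:height, 0:width]
    py, px = rr + 0.5, cc + 0.5
    inside = np.zeros((height, width), dtype=bool)
    n = len(xs)
    for i in range(n):
        j = (i - 1) % n                       # previous vertex closes the polygon
        yi, xi, yj, xj = ys[i], xs[i], ys[j], xs[j]
        crosses = (yi > py) != (yj > py)      # edge spans the row of this centre
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = xi + (py - yi) * (xj - xi) / (yj - yi)
        inside ^= crosses & (px < x_cross)    # toggle on each crossing to the right
    return inside

def load_mask_sketch(polygons, class_ids, height, width):
    """Stack one mask per instance -> (H, W, N), like load_mask returns."""
    mask = np.zeros((height, width, len(polygons)), dtype=bool)
    for i, p in enumerate(polygons):
        mask[..., i] = polygon_mask(p["all_points_y"], p["all_points_x"], height, width)
    return mask, np.array(class_ids, dtype=np.int32)

rect = {"name": "polygon", "all_points_x": [1, 5, 5, 1], "all_points_y": [1, 1, 3, 3]}
tri = {"name": "polygon", "all_points_x": [0, 4, 0], "all_points_y": [0, 0, 3]}
masks, ids = load_mask_sketch([rect, tri], [1, 2], height=5, width=6)
print(masks.shape, int(masks[..., 0].sum()), int(masks[..., 1].sum()), ids)
```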

Earlier we discussed that you should not use more than one shape while annotating, as it complicates loading the masks.

However, if you want to use multiple shapes, i.e. circle, ellipse, and polygon, then you will need to change the load_mask function as below.

def load_mask(self, image_id):
    ...
    ## change the for loop only
    for i, p in enumerate(info["polygons"]):
        # Get indexes of pixels inside the shape and set them to 1.
        # VIA stores x (column) and y (row); skimage.draw expects rows first.
        if p['name'] == 'polygon':
            rr, cc = skimage.draw.polygon(p['all_points_y'], p['all_points_x'])
        elif p['name'] == 'circle':
            # circle(row, col, radius); newer scikit-image versions replace it with skimage.draw.disk
            rr, cc = skimage.draw.circle(p['cy'], p['cx'], p['r'])
        else:
            # ellipse(row, col, row_radius, col_radius, rotation=...)
            rr, cc = skimage.draw.ellipse(p['cy'], p['cx'], p['ry'], p['rx'],
                                          rotation=np.deg2rad(p['theta']))
        mask[rr, cc, i] = 1
    ...

This is one way you can load masks with multiple shapes, but it is just speculation, and the model will not detect a circle or ellipse unless it captures a perfect circle or ellipse.
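The main trap with circles and ellipses is axis order: VIA's cx is a column (x) and cy is a row (y), while array indexing is [row, col]. This NumPy sketch builds masks directly from the shape attributes so the ordering is explicit. circle_mask and ellipse_mask are hypothetical helpers; pixel (r, c) is treated as the point (r, c), and theta_rad is assumed to already be in radians.

```python
import numpy as np

def circle_mask(cx, cy, r, height, width):
    """Boolean mask for a VIA circle: cx is a column (x), cy is a row (y)."""
    rr, cc = np.mgrid[0:height, 0:width]
    return (rr - cy) ** 2 + (cc - cx) ** 2 <= r ** 2

def ellipse_mask(cx, cy, rx, ry, theta_rad, height, width):
    """Boolean mask for a VIA ellipse whose axes are rotated by theta_rad."""
    rr, cc = np.mgrid[0:height, 0:width]
    x, y = cc - cx, rr - cy
    # Express each pixel offset in the ellipse's own (rotated) axes.
    u = x * np.cos(theta_rad) + y * np.sin(theta_rad)
    v = -x * np.sin(theta_rad) + y * np.cos(theta_rad)
    return (u / rx) ** 2 + (v / ry) ** 2 <= 1.0

# A small circle near the right edge of a 5x10 image.
m = circle_mask(cx=8, cy=2, r=1, height=5, width=10)
print(np.argwhere(m).tolist())

# Swapping cx and cy would put the centre on row 8, outside the image.
print(circle_mask(cx=2, cy=8, r=1, height=5, width=10).any())
```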

5. Creating the train() function


First, we will create an instance of the CustomDataset class for the training dataset. Similarly, we create another instance for the validation dataset. Then we will call the load_custom() method, passing in the name of the directory where our data is stored. The 'layers' parameter is set to 'heads' here, as I am not planning to train all the layers in the model. This will only train some of the top layers in the architecture. If you want, you can set 'layers' to 'all' to train all the layers in the model.

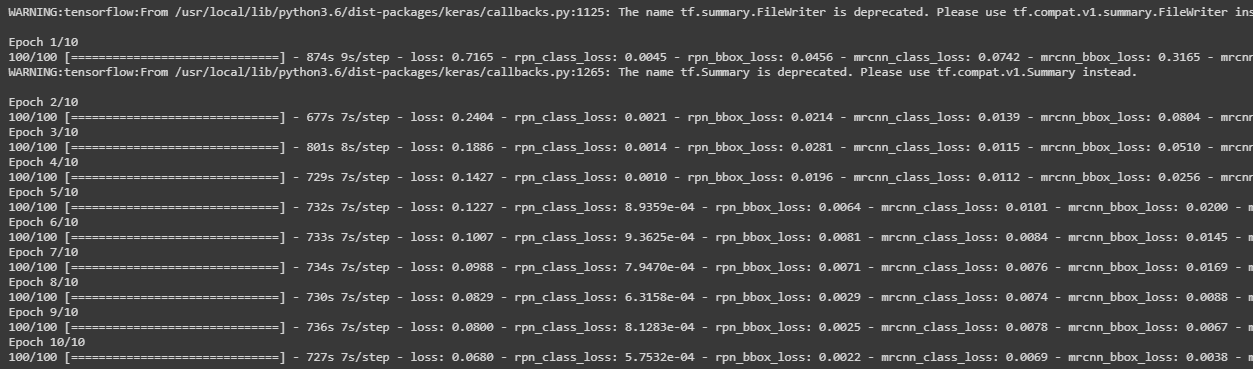

I am running the model for only 10 epochs, as this tutorial is only meant to guide you.
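The train() function itself appears only as an image. Based on the description in this step and matterport's MaskRCNN.train(train_dataset, val_dataset, learning_rate, epochs, layers) method, it probably looks roughly like the sketch below; this is a reconstruction, not the author's exact code. The _Fake* classes are hypothetical stubs so the call pattern can run without mrcnn installed.

```python
def train(model, config, dataset_cls, dataset_dir, epochs=10, layers="heads"):
    """Sketch of the train() step: build both datasets, then train.

    dataset_cls is expected to behave like the notebook's CustomDataset
    (load_custom(dir, subset) followed by prepare()).
    """
    dataset_train = dataset_cls()
    dataset_train.load_custom(dataset_dir, "train")
    dataset_train.prepare()

    dataset_val = dataset_cls()
    dataset_val.load_custom(dataset_dir, "val")
    dataset_val.prepare()

    # layers="heads" trains only the top layers; "all" trains the whole network.
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE,
                epochs=epochs,
                layers=layers)
    return dataset_train, dataset_val

# Hypothetical stand-ins so the call pattern can be exercised without mrcnn.
class _FakeDataset:
    def load_custom(self, dataset_dir, subset):
        self.subset = subset
    def prepare(self):
        self.prepared = True

class _FakeModel:
    def train(self, train_ds, val_ds, learning_rate, epochs, layers):
        self.calls = (train_ds.subset, val_ds.subset, learning_rate, epochs, layers)

class _FakeConfig:
    LEARNING_RATE = 0.001

fake_model = _FakeModel()
train(fake_model, _FakeConfig(), _FakeDataset, "/content/dataset")
print(fake_model.calls)
```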

6. Setup before training

This step sets up the model for training, then downloads and loads the pre-trained weights. You can load the COCO weights or those of your last saved model.

The call 'modellib.MaskRCNN()' is where you can get lots of errors if you have not chosen the right versions in the 2nd section. This method has a parameter 'mode', which decides whether we want to train or test the model. If you want to test, set 'mode' to 'inference'. 'model_dir' is the directory where logs and checkpoints are saved during training as a backup. Then, we download the pre-trained COCO weights in the next step.

Note: If you want to resume training from a saved point, you need to change 'weights_path' to the path where your .h5 file is stored.
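Why does loading COCO weights need special care? COCO was trained with 81 classes (80 plus background), while this model has 3, so the class-specific head layers have different shapes. matterport's load_weights accepts by_name=True and an exclude list for this case; the layer names below are the ones its balloon sample excludes. weights_plan is a hypothetical helper that picks the exclude list depending on whether you start from COCO or resume from your own .h5 file.

```python
import os

# Head layers whose shapes depend on NUM_CLASSES; skip them when starting from COCO.
COCO_HEAD_LAYERS = ["mrcnn_class_logits", "mrcnn_bbox_fc", "mrcnn_bbox", "mrcnn_mask"]

def weights_plan(weights_path, coco_weights_path):
    """Return (path_to_load, layers_to_exclude).

    Starting from COCO -> exclude the class-specific heads.
    Resuming from your own checkpoint -> load every layer.
    """
    if os.path.abspath(weights_path) == os.path.abspath(coco_weights_path):
        return weights_path, COCO_HEAD_LAYERS
    return weights_path, []

path, exclude = weights_plan("/content/mask_rcnn_coco.h5", "/content/mask_rcnn_coco.h5")
print(path, exclude)
# In the notebook, the result would feed:
# model.load_weights(path, by_name=True, exclude=exclude)
```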

7. Start training

This step should not throw any errors if you have followed the steps above, and training should start smoothly. Remember that we need Keras version 2.2.5 for this step to run error-free.

train(model)

Note: If you get an error, restart the runtime and run all the cells again. It may be caused by the version of TensorFlow or Keras that was loaded. Follow step 2 for choosing the right versions.

Note: Ignore any warnings you get while training!

8. Testing

You can find the instructions in the notebook on how to test our model once training is finished.

import os
import sys
import random
import math
import re
import time
import numpy as np
import tensorflow as tf
import matplotlib
import matplotlib.pyplot as plt
import matplotlib.patches as patches
import matplotlib.image as mpimg

# Root directory of the project
#ROOT_DIR = os.path.abspath("/")

# Import Mask RCNN
sys.path.append(ROOT_DIR)  # To find local version of the library
from mrcnn import utils
from mrcnn import visualize
from mrcnn.visualize import display_images
import mrcnn.model as modellib
from mrcnn.model import log

%matplotlib inline

# Directory to save logs and trained model
MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# Path to the trained weights.
# Pre-trained matterport weights are available from the Releases page:
# https://github.com/matterport/Mask_RCNN/releases
WEIGHTS_PATH = "/content/wastedata-Mask_RCNN-multiple-classes/main/Mask_RCNN/logs/object20201209T0658/mask_rcnn_object_0010.h5"  # TODO: update this path

Here, we define the path to our last saved weights file to run inference on. Then, we define a few simple configuration settings. The confidence threshold is specified again for testing; this is different from the one used in training.

config = CustomConfig()
CUSTOM_DIR = os.path.join(ROOT_DIR, "/content/dataset/")

class InferenceConfig(config.__class__):
    # Run detection on one image at a time
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1
    DETECTION_MIN_CONFIDENCE = 0.7

config = InferenceConfig()
config.display()

# Device to load the neural network on.
# Useful if you're training a model on the same machine,
# in which case use CPU and leave the GPU for training.
DEVICE = "/gpu:0"  # /cpu:0 or /gpu:0

# Inspect the model in training or inference modes
# values: 'inference' or 'training'
TEST_MODE = "inference"

def get_ax(rows=1, cols=1, size=16):
    """Return a Matplotlib Axes array to be used in all visualizations
    in the notebook. Provide a central point to control graph sizes.
    Adjust the size attribute to control how big to render images.
    """
    _, ax = plt.subplots(rows, cols, figsize=(size*cols, size*rows))
    return ax

# Load validation dataset

CUSTOM_DIR = "/content/dataset"

dataset = CustomDataset()

dataset.load_custom(CUSTOM_DIR, "val") # Must call before using the dataset

dataset.prepare()

print("Images: {}\nClasses: {}".format(len(dataset.image_ids), dataset.class_names))

Now we will load the model to run inference.

#LOAD MODEL
# Create model in inference mode
with tf.device(DEVICE):
    model = modellib.MaskRCNN(mode="inference", model_dir=MODEL_DIR, config=config)

Now loading the weights in the model.

# Load COCO weights, or load the last model you trained
weights_path = WEIGHTS_PATH

# Load weights
print("Loading weights ", weights_path)
model.load_weights(weights_path, by_name=True)

Now, we are ready for testing our model on any paradigm.

#RUN DETECTION
image_id = random.choice(dataset.image_ids)
print(image_id)
image, image_meta, gt_class_id, gt_bbox, gt_mask =\
    modellib.load_image_gt(dataset, config, image_id, use_mini_mask=False)
info = dataset.image_info[image_id]
print("image ID: {}.{} ({}) {}".format(info["source"], info["id"], image_id,
                                       dataset.image_reference(image_id)))

# Run object detection
results = model.detect([image], verbose=1)

# Display results
ax = get_ax(1)
r = results[0]
visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                            dataset.class_names, r['scores'], ax=ax,
                            title="Predictions")
log("gt_class_id", gt_class_id)
log("gt_bbox", gt_bbox)
log("gt_mask", gt_mask)

# This is for predicting images which are not present in the dataset
path_to_new_image = '/content/dataset/test/unnamed.jpg'
image1 = mpimg.imread(path_to_new_image)

# Run object detection
print(len([image1]))
results1 = model.detect([image1], verbose=1)

# Display results
ax = get_ax(1)
r1 = results1[0]
visualize.display_instances(image1, r1['rois'], r1['masks'], r1['class_ids'],
                            dataset.class_names, r1['scores'], ax=ax, title="Predictions1")
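Since the detection cell logs gt_mask alongside the predictions, a natural follow-up is to measure how well they agree. The sketch below computes intersection-over-union (IoU) between every ground-truth mask and every predicted mask; matterport's utils module has a compute_overlaps_masks function for the same purpose. mask_iou is a hypothetical name, and the masks here are toy data.

```python
import numpy as np

def mask_iou(masks_a, masks_b):
    """IoU matrix between boolean mask stacks shaped (H, W, N) and (H, W, M)."""
    a = masks_a.reshape(-1, masks_a.shape[-1]).astype(np.float64)
    b = masks_b.reshape(-1, masks_b.shape[-1]).astype(np.float64)
    intersection = a.T @ b                      # (N, M) shared pixel counts
    area_a = a.sum(axis=0)[:, None]
    area_b = b.sum(axis=0)[None, :]
    union = area_a + area_b - intersection
    return np.where(union > 0, intersection / np.maximum(union, 1), 0.0)

gt = np.zeros((4, 4, 1), dtype=bool)
gt[0:2, 0:2, 0] = True          # 4 ground-truth pixels
pred = np.zeros((4, 4, 2), dtype=bool)
pred[0:2, 0:3, 0] = True        # 6 pixels, 4 shared with gt -> IoU 4/6
pred[3, 3, 1] = True            # no overlap -> IoU 0
iou = mask_iou(gt, pred)
print(iou)
```

With the notebook's variables, the equivalent call would be mask_iou(gt_mask, r['masks']); both should be (H, W, N) arrays at the same resolution, because the image passed to model.detect is the one returned by load_image_gt.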

9. Colour Splash

For fun, you can try the code below, which is present in the original implementation of Mask RCNN. It converts everything to grayscale except for the object mask areas.

You can call this function as shown below. For video detection, call the second function.

splash = color_splash(image, r['masks'])
display_images([splash], cols=1)
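For readers without the notebook at hand, here is a NumPy-only sketch of the color splash idea, assuming an RGB uint8 image and an (H, W, N) boolean mask stack as returned in r['masks']: pixels covered by any instance keep their colour, and everything else becomes grayscale. Unlike matterport's version, which uses skimage.color.rgb2gray, this sketch swaps in the ITU-R BT.601 luminance weights, so gray levels differ slightly.

```python
import numpy as np

def color_splash(image, mask):
    """Grayscale everywhere except where any instance mask is set.

    image: (H, W, 3) uint8 RGB; mask: (H, W, N) boolean, one channel per instance.
    """
    # Luminance-weighted grayscale (BT.601 weights), copied to 3 channels.
    gray = image[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    gray = np.repeat(gray[..., None], 3, axis=-1)
    if mask.shape[-1] > 0:
        # Collapse all instances into one foreground mask of shape (H, W, 1).
        fg = mask.any(axis=-1, keepdims=True)
        splash = np.where(fg, image, gray)
    else:
        splash = gray                          # no detections: all gray
    return splash.astype(np.uint8)

img = np.zeros((2, 2, 3), dtype=np.uint8)
img[..., 0] = 255                              # pure red test image
m = np.zeros((2, 2, 1), dtype=bool)
m[0, 0, 0] = True                              # one "object" pixel
out = color_splash(img, m)
print(out[0, 0], out[1, 1])
```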

10. Further…

You can learn how Region Proposals work from the later cells of the notebook. Below are some interesting images that might catch your eye if you are curious about how Region Proposal Networks work.

You may want to see how proposal classification works and how we get our final regions for segmentation. This portion is also covered in the later part of the notebook.

You can find the notebook comprising all of the code we saw above on GitHub here. You can connect with me on LinkedIn here.

References:

- https://arxiv.org/pdf/1703.06870.pdf

- https://github.com/SriRamGovardhanam/wastedata-Mask_RCNN-multiple-classes

- https://github.com/matterport/Mask_RCNN

- https://engineering.matterport.com/splash-of-color-instance-segmentation-with-mask-r-cnn-and-tensorflow-7c761e238b46


Source: https://towardsdatascience.com/mask-rcnn-implementation-on-a-custom-dataset-fd9a878123d4

Posted by: gloverfign1969.blogspot.com
